Big O notation is a mathematical notation used in computer science to describe algorithm efficiency. It originated in mathematics but gained prominence in computer science in the 1970s, with Donald Knuth’s work. It simplifies the comparison of algorithms by giving an upper bound on how their running time or memory use grows as input size increases, most often quoted for the worst case, while ignoring constant factors and lower-order terms.

For instance, consider linear search (O(n)) versus binary search (O(log n)). Linear search checks each element in turn, so in the worst case its runtime grows in direct proportion to the input size. Binary search, which requires sorted input, halves the search space with each iteration, so its runtime grows only logarithmically.

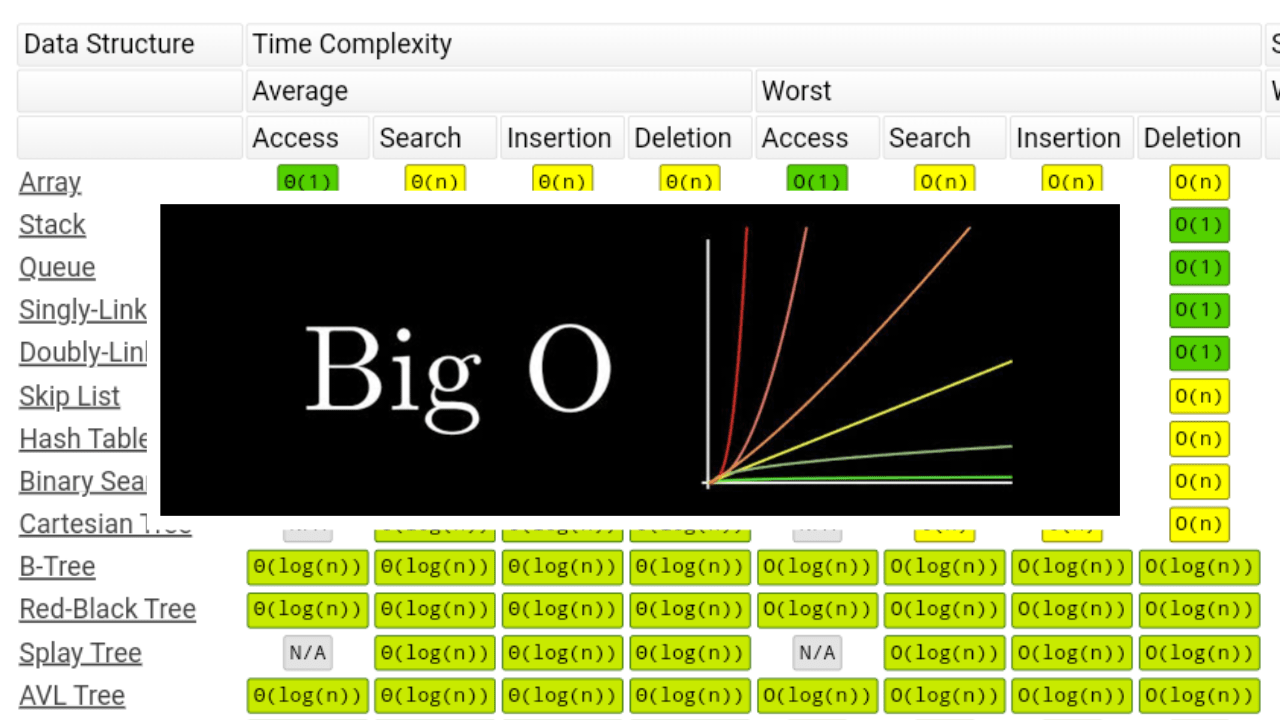
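The two searches described above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function names and the step counters are assumptions added here to make the growth rates visible, and the binary search assumes the list is already sorted in ascending order.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for i, value in enumerate(items):
        comparisons += 1  # one comparison per element visited
        if value == target:
            return i, comparisons
    return -1, comparisons


def binary_search(sorted_items, target):
    """Return (index, steps); assumes sorted_items is in ascending order."""
    lo, hi = 0, len(sorted_items) - 1
    steps = 0
    while lo <= hi:
        steps += 1  # each step discards about half of the remaining range
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, steps


# Worst case for linear search: the target is the last element.
data = list(range(1_000_000))
linear_result = linear_search(data, 999_999)
binary_result = binary_search(data, 999_999)
print(linear_result, binary_result)
```

On this one-million-element list, the linear search makes 1,000,000 comparisons to reach the last element, while the binary search finds it in 20 steps.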

Big O notation categorizes algorithms based on their scalability. O(1) represents constant time, like retrieving an array element by index. O(n) signifies linear time, like finding the maximum element in an unsorted array. O(n log n) denotes linearithmic time, as seen in efficient comparison sorts such as merge sort. O(n^2) reflects quadratic time, as in bubble sort.
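As a rough sketch, each of these classes can be matched to a small Python function. The function names are illustrative assumptions, and merge sort is used here as one example of an O(n log n) sort; other algorithms fall into the same class.

```python
def get_at(items, index):
    # O(1): indexing a list takes the same time regardless of its length.
    return items[index]


def find_max(items):
    # O(n): every element is inspected exactly once.
    largest = items[0]
    for value in items:
        if value > largest:
            largest = value
    return largest


def merge_sort(items):
    # O(n log n): about log n levels of splitting, with O(n) merging per level.
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def bubble_sort(items):
    # O(n^2): nested passes compare adjacent pairs, roughly n * n / 2 times.
    result = list(items)  # sort a copy so the input is left unchanged
    for end in range(len(result) - 1, 0, -1):
        for k in range(end):
            if result[k] > result[k + 1]:
                result[k], result[k + 1] = result[k + 1], result[k]
    return result
```

Both sorting functions return the same result for the same input; the difference lies in how the number of operations grows as the list gets longer.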

Understanding Big O notation aids in algorithm selection and optimization, both crucial for designing efficient software systems. It applies to space complexity as well as time complexity, so developers can weigh running time against memory use when choosing an approach to a given computational problem.

Big O Notation – Explained In 200 Words